Nadella's Law

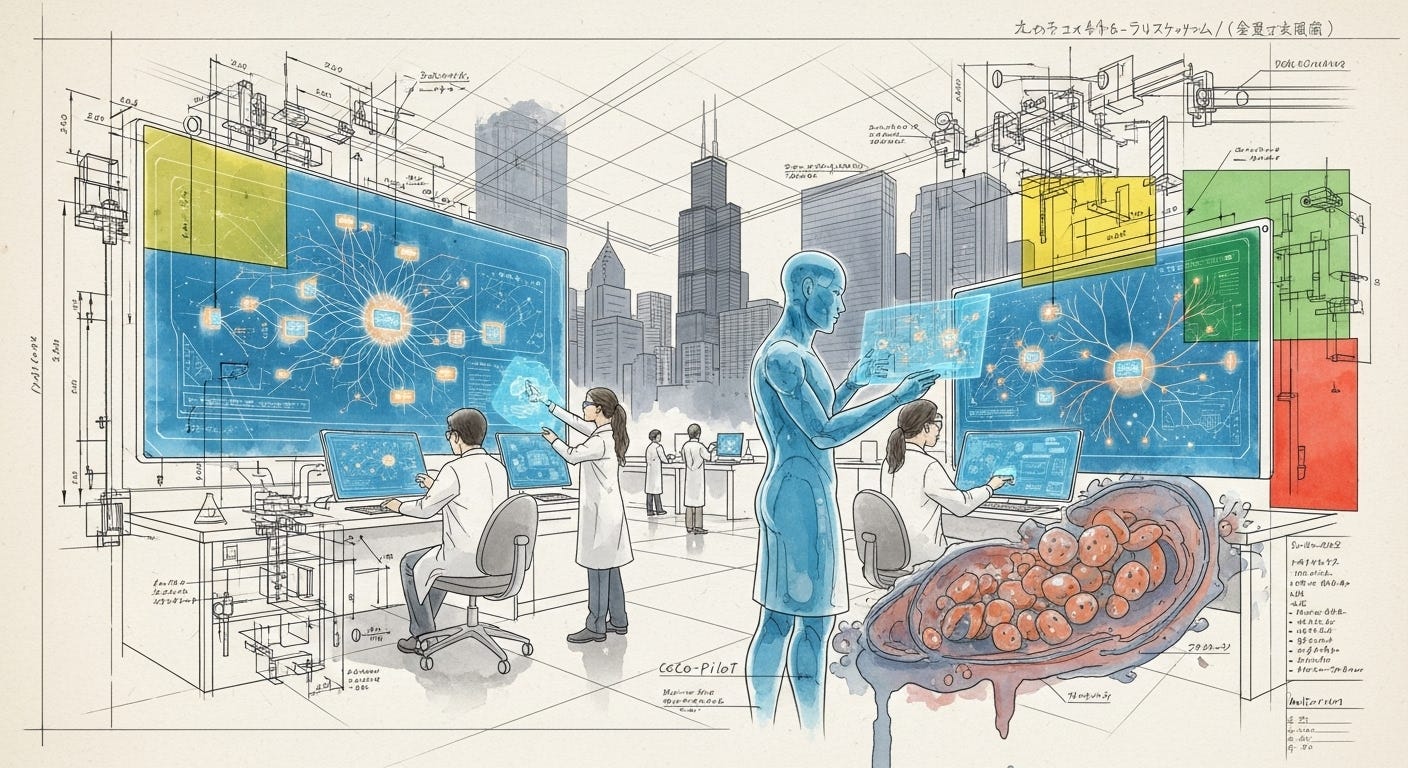

Why Nadella's Law is about to break the clinical trial model, and how to profit from the chaos.

Artificial intelligence is now advancing at a pace that has earned its own name: "Nadella's Law." Moore's Law famously described the number of transistors on a chip doubling roughly every two years; Microsoft CEO Satya Nadella observed in 2024 that AI capabilities were doubling every six months. As Ian Bremmer notes in a recent TIME analysis, this isn't just a change in speed; it's a c…
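To make the gap concrete, here is a simple compounding comparison. It assumes both trends follow a clean exponential with a fixed doubling period (an idealization, since neither "law" is that regular in practice). With a doubling period of $T$ months, the growth factor after $t$ months is:

$$
G(t) = 2^{t/T}
$$

$$
t = 24:\quad G_{\text{Moore}} = 2^{24/24} = 2, \qquad G_{\text{Nadella}} = 2^{24/6} = 2^{4} = 16
$$

$$
t = 120:\quad G_{\text{Moore}} = 2^{120/24} = 2^{5} = 32, \qquad G_{\text{Nadella}} = 2^{120/6} = 2^{20} = 1{,}048{,}576
$$

Under these assumptions, the same two-year window yields a 16-fold improvement instead of a 2-fold one, and over a decade the two curves differ by more than four orders of magnitude.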